Computational complexity is a field of computer science that focuses on classifying and analyzing the efficiency of algorithms and problems. It deals with understanding how the running time or resource requirements of an algorithm grow as the size of the input data increases. This analysis helps in comparing and selecting algorithms for solving specific problems and understanding the inherent difficulty of certain computational tasks.

Computational complexity theory plays a crucial role in computer science, helping researchers and practitioners understand the limitations and possibilities of algorithms. It helps in making informed decisions about algorithm selection, hardware design, and the feasibility of solving real-world problems within reasonable time and resource constraints.

The origins of complexity theory can be traced back to several foundational concepts and theses in computer science and mathematics, including Church’s Thesis and the Cobham-Edmonds Thesis.

❖ Church’s Thesis and Effective Computability:

Church’s Thesis, proposed by the American mathematician Alonzo Church in the 1930s, is a fundamental concept in the theory of computation. It asserts that any function that is effectively calculable can be computed by a Turing machine (or equivalently, by a recursive function). In other words, if there is an algorithmic method to compute a mathematical function, that method can be simulated by a Turing machine.

FIG. Computational Complexity

⮚ Turing Machines: Alan Turing, a British mathematician, independently introduced the concept of Turing machines around the same time as Church’s Thesis. Turing machines are abstract mathematical models of computation that consist of a tape and a read/write head that can move left or right on the tape, following a set of rules. Turing machines are considered equivalent in computational power to the intuitive notion of an algorithm.

⮚ Effective Computability: The notion of “effective computability” refers to the idea that there exists a well-defined procedure or algorithm to compute a function. Church’s Thesis formalizes this concept, asserting that any effectively computable function can be computed by a Turing machine.

FIG. Rice’s theorem applies to Turing machines

❖ The Cobham-Edmonds Thesis and Feasible Computability:

The Cobham-Edmonds Thesis, named after the computer scientists Alan Cobham and Jack Edmonds, addresses the concept of feasible computability. It suggests that problems that can be solved by algorithms with “reasonable” resource usage are precisely the problems that can be solved efficiently by a Turing machine. In other words, it focuses on the notion of what can be computed in practice within resource constraints.

⮚ Feasible Computability: Feasible computability is concerned with understanding the computational problems that can be solved efficiently using limited computational resources, such as time and memory. It deals with the practical aspects of computation, especially in the context of real-world computing devices.

⮚ Complexity Classes: The Cobham-Edmonds Thesis laid the groundwork for the development of complexity theory, which classifies problems into various complexity classes based on their resource requirements. For example, P (polynomial time) represents problems that are efficiently solvable in polynomial time, while NP (nondeterministic polynomial time) includes problems for which solutions can be efficiently verified in polynomial time.

In summary, Church’s Thesis and the concept of effective computability provide a foundational understanding of computation and algorithms, while the Cobham-Edmonds Thesis extends this to the realm of practical and feasible computation, setting the stage for the development of complexity theory and the study of computational complexity. These theses have had a profound impact on the field of computer science and continue to shape our understanding of computation and its limits.

❖ Here are some key concepts and terms related to computational complexity:

A. Time Complexity: The amount of computational time an algorithm needs as a function of input size is measured by time complexity. Big O notation, such as O(n), O(n^2), is commonly used to represent it. This notation gives an upper constraint on the rate at which an algorithm’s running time grows with respect to the amount of the input.

B. Space Complexity: The amount of memory (RAM) needed by an algorithm as a function of input size is measured by space complexity. It is represented using big O notation (e.g. O(n), O(n^2)), just like time complexity.

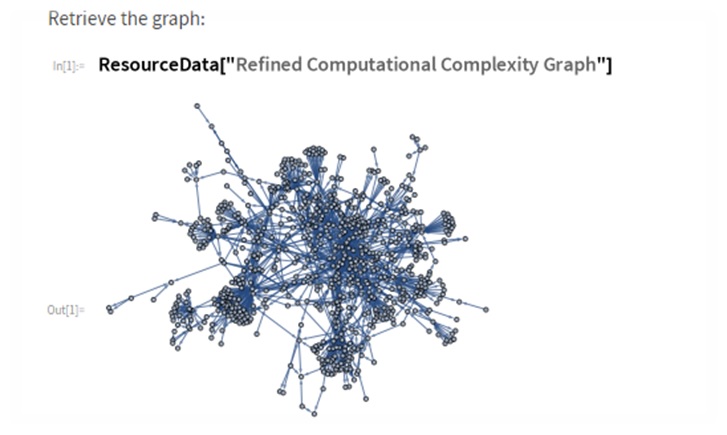

FIG. Graph of Computational Complexity for Link Analysis Ranking Experiments

C. P vs. NP Problem: The P vs. NP problem is among the most well-known issues in computational complexity theory. It poses the question of whether any problem that has a suggested solution that can be swiftly confirmed (in “polynomial time”) can also be resolved swiftly (in polynomial time). If P = NP, then many difficult issues, such as those pertaining to cryptography and optimization, should be amenable to efficient solution.

D. NP-completeness: A class of problems within NP (nondeterministic polynomial time) for which if any one of them is solved in polynomial time, then all problems in NP can be solved in polynomial time. These problems are called NP-complete, and they are believed to be inherently difficult.

E. Polynomial Time: Polynomial-time algorithms are regarded as efficient. This indicates that a polynomial function of the input size bounds their execution duration. Class P includes problems that have polynomial time solutions.

F. Exponential Time: Algorithms that run in exponential time grow rapidly with the input size. Problems with exponential-time algorithms are generally considered intractable for large inputs unless breakthroughs occur in algorithm design.

G. Complexity classes: Computational complexity theory defines various complexity classes to classify problems based on their difficulty. Some important classes include P, NP, NP-complete, co-NP, and more.

H. Approximation Algorithms: In some cases, it is not possible to find an exact solution to a problem efficiently. Approximation algorithms aim to find near-optimal solutions in a reasonable amount of time.

I. Reduction: Numerous complexity findings are determined by means of reduction methodologies. This entails converting a problem into another problem while maintaining the same level of difficulty. For instance, demonstrating that problem A belongs to the class of NP-complete problems can be done by using a reduction technique to show that it is equivalent to problem B, which is already established as an NP-complete problem.

J. Parallel Complexity: Analyzing how efficiently problems can be solved in parallel computing environments, where multiple processors or cores work together, is another aspect of computational complexity.

❖ Connections to Logic and Philosophy:

The field of computational complexity theory has deep connections to logic, philosophy, and various other areas of computer science and mathematics. Here are some of the key connections and related concepts:

A. Logic: Computational complexity theory is closely related to mathematical logic, particularly in the study of formal systems, proof theory, and the limits of provability. Many complexity classes are defined in terms of logical concepts, such as quantifiers and alternations.

B. Philosophy: The P vs. NP problem has philosophical implications. Its resolution would shed light on the nature of mathematical and logical truth, as well as the relationship between what can be verified and what can be found through search. Philosophers have explored the implications of computational complexity for epistemology and the philosophy of mathematics.

❖ Significance of P≠NP:

The question of whether P (problems efficiently solvable) equals NP (problems efficiently verifiable) is one of the most important and unsolved problems in computer science. If P ≠ NP were proven, it would imply that many computational problems, including some in cryptography and optimization, are inherently difficult to solve efficiently. This would have profound implications for information security, algorithm design, and decision-making in various fields.

❖ Satisfiability, Validity, and Model Checking:

A. Satisfiability (SAT): A basic issue in computer science and complexity theory is SAT. It entails figuring out if a particular Boolean formula can be satisfied by giving its variables truth values in a way that results in the formula being true overall. The first issue to be shown as NP-complete is SAT.

B. Validity: Validity is a logical concept that deals with whether an argument is logically sound. In computational complexity, determining the validity of logical formulas can be computationally expensive.

C. Model Checking: Model checking is a technique used in formal verification to verify whether a given finite-state model satisfies a specification expressed in temporal logic. It has applications in hardware and software verification.

❖ Proof Complexity:

Proof complexity is the study of the size and structure of mathematical proofs. It explores questions related to how efficiently we can prove statements and the trade-offs between proof length and proof strength. Proof complexity is closely tied to computational complexity through concepts like Cook’s theorem and the notion of self-reducibility.

❖ Descriptive Complexity:

Descriptive complexity theory investigates the relationship between computational complexity classes and logical formalisms. It aims to characterize complexity classes using logical properties of problems. Descriptive complexity connects complexity theory to classical first-order logic and other logical formalisms.

❖ Bounded Arithmetic:

Bounded arithmetic is a branch of mathematical logic that studies arithmetic theories with restricted quantifier alternations. It explores the relationship between logical theories and computational complexity classes like P and NP.

❖ Strict Finitism:

Strict finitism is a philosophical position that imposes strict limitations on what can be considered a valid mathematical proof. It has implications for the foundations of mathematics and the relationship between logic and computation.