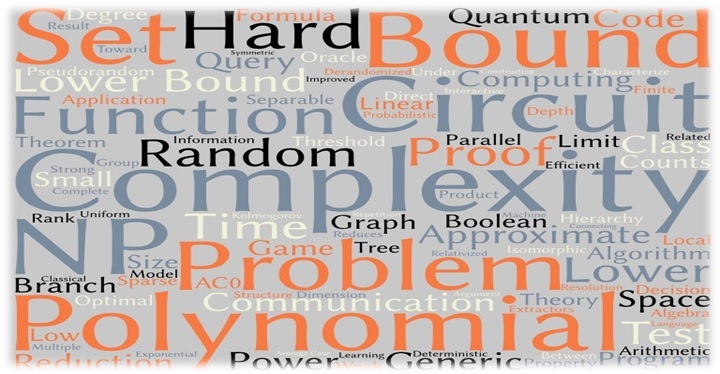

🔍 What is Computational Complexity?

Computational complexity is a core area of computer science focused on analyzing and classifying algorithms based on their efficiency. It studies how the time and space (memory) requirements of algorithms scale with the size of the input.

This field helps determine:

-

The practicality of solving a computational problem

-

Which algorithm is most efficient for a given task

-

Whether a solution is even feasible under current resource constraints

Understanding computational complexity is essential for algorithm design, software development, hardware optimization, and solving real-world computational problems.

🧠 Historical Foundations: Church’s Thesis & Cobham-Edmonds Thesis

1. Church’s Thesis (Effective Computability)

Proposed by Alonzo Church in the 1930s, Church’s Thesis states that any function that is effectively calculable can be computed by a Turing machine. This laid the groundwork for defining what it means for a problem to be computable.

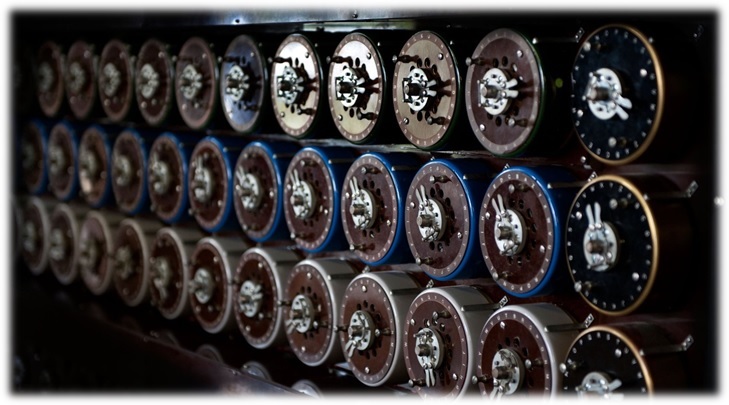

➤ Turing Machines

Introduced by Alan Turing, Turing machines are abstract models that simulate algorithmic logic. They consist of a tape and a read/write head operating under a fixed set of rules, capable of representing any algorithmic process.

➤ Effective Computability

This refers to a well-defined procedure that can be executed to solve a function or problem—essentially the basis of algorithm design.

2. Cobham-Edmonds Thesis (Feasible Computability)

Named after Alan Cobham and Jack Edmonds, this thesis explores what it means for a problem to be feasibly solvable—i.e., solvable in a reasonable amount of time and memory.

➤ Feasible Computability

This principle focuses on problems solvable with practical resource constraints, helping identify which algorithms are usable in real-world systems.

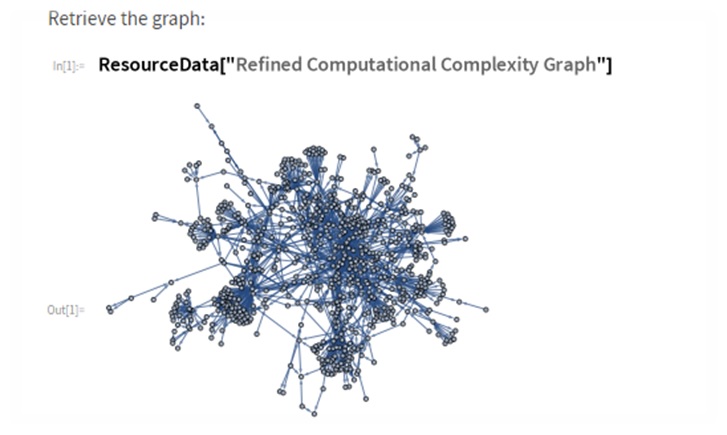

➤ Complexity Classes

The thesis introduced the idea of categorizing problems based on computational resources required. Major classes include:

-

P (Polynomial Time): Problems solvable efficiently

-

NP (Nondeterministic Polynomial Time): Problems whose solutions can be verified efficiently

-

NP-Complete: The most challenging problems in NP

⚙️ Key Concepts in Computational Complexity

A. Time Complexity

Measures how an algorithm’s running time increases with input size. Common notations include:

-

O(n) — linear time

-

O(n²) — quadratic time

-

O(log n), etc.

B. Space Complexity

Refers to the memory an algorithm uses as input grows, also expressed in Big O notation.

C. P vs NP Problem

This is one of the most critical unsolved problems in computer science. It asks:

“If a problem’s solution can be verified quickly (NP), can it also be solved quickly (P)?”

If P = NP, many hard problems (e.g., cryptography, optimization) would become solvable efficiently.

D. NP-Completeness

NP-complete problems are the hardest in the NP class. If even one of them can be solved in polynomial time, all NP problems can be solved efficiently.

E. Polynomial vs Exponential Time

-

Polynomial-time algorithms are efficient and feasible.

-

Exponential-time algorithms are generally impractical for large inputs due to their rapid growth.

F. Approximation Algorithms

When exact solutions are computationally expensive or impossible, approximation algorithms aim to provide near-optimal solutions within a reasonable timeframe.

G. Reductions

A technique used to prove the complexity of problems. If problem A can be transformed into problem B (already known to be hard), A is also considered hard.

H. Parallel Complexity

Analyzes how well problems can be solved using parallel computing, utilizing multiple processors for increased efficiency.

🧩 Connections to Logic, Philosophy & Mathematical Foundations

1. Logic & Formal Systems

Computational complexity is deeply rooted in mathematical logic and proof theory. Many complexity classes are defined using logical concepts such as quantifiers and alternations.

2. Philosophy & Epistemology

The P vs. NP problem has philosophical significance in understanding the nature of knowledge, verifiability, and logical truth.

🔐 Real-World Applications & Advanced Concepts

A. Satisfiability (SAT)

SAT is a fundamental NP-complete problem that asks whether there exists a truth assignment for variables that satisfies a given Boolean formula.

B. Validity

Determining if a logical argument is valid is computationally complex and crucial in automated reasoning systems.

C. Model Checking

Used in formal verification, model checking ensures that systems (like software or hardware) meet specific correctness criteria.

📘 Advanced Theoretical Topics

1. Proof Complexity

Studies the structure and size of proofs, linking the efficiency of computation to the strength and length of logical arguments.

2. Descriptive Complexity

Explores how logical languages (like first-order logic) can define complexity classes.

3. Bounded Arithmetic

Examines restricted logical theories and their relationship to complexity classes such as P and NP.

4. Strict Finitism

A philosophical view questioning the validity of infinite mathematical objects—relevant when defining computation with finite resources.

✅ Conclusion

Computational complexity offers a deep understanding of what can and cannot be computed efficiently. It helps us:

-

Design better algorithms

-

Set realistic expectations for problem-solving

-

Understand the limits of computation and logic

As computer science advances, complexity theory continues to guide our understanding of both theoretical and practical computation.

If you’re passionate about problem-solving, algorithms, or logic, studying computational complexity can open up exciting academic and research opportunities in areas like artificial intelligence, cybersecurity, and data science.